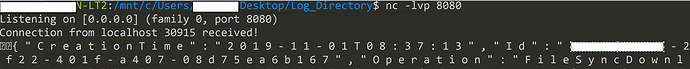

I am using Filebeat to read a file that receives logs in JSON format, with one JSON object per line.

Here is one line from the text file being watched by Filebeat:

    {"CreationTime":"2019-11-01T08:32:17","Id":"xxxxxxx-1534-4539-a7e9-xxxxxf","Operation":"FileSyncDownloadedPartial","OrganizationId":"exxxxx-xxx-xxxxxxxxb","RecordType":6,"UserKey":"i:0h.f|membership|10xxxxxxxxxxxxxxd@live.com","UserType":0,"Version":1,"Workload":"OneDrive","ClientIP":"xxx.xxx.x66","ObjectId":"https:\/\/nxxxxxxxxxxxxxy.sharepoint.com\/personal\/sxxxxxxe_com\/Documents\/4xxxxxxxxxy\/HOWxxxxxxxxxx\/Euxenxxxxtxxxx6.pdf","UserId":"xxxxxxxxxxxxx@xxxxx.com","CorrelationId":"4xxxxxxxxxxxx632359fb88c8","EventSource":"SharePoint","ItemType":"File","ListId":"8cxxxx-x-xxxxxx-x24","ListItemUniqueId":"638xxxxxxxx-x-xxxxxxx-xxxx55","Site":"34xxxxxxxx-xxxxx-xxxxxx-ade21","UserAgent":"Microsoft Office Upload Center 2014 (16.0.4849) Windows NT 6.1","WebId":"6863xxxxxxxxxx-xxxxx-xxxxxxxx8245","SourceFileExtension":"pdf","SiteUrl":"https:\/\/xxxxxxxxxxxxxxxx.sharepoint.com\/personal\/xxxxxxxxxx_com\/","SourceFileName":"Excxxxxxxxxxxxxxxx.pdf","SourceRelativeUrl":"Dxxxxxxxxxx\/4Rexxxxxxxxxxx\/HOxxxxxxxxxxxxx"}

My Filebeat configuration for this endpoint is the following:

    output.logstash:
      hosts: ["graylog.xxxxxxxxxxx.com:9876"]
    path:
      data: C:\Program Files\Graylog\sidecar\cache\filebeat\data
      logs: C:\Program Files\Graylog\sidecar\logs
    tags:
      - windows
    filebeat.inputs:
    - paths:
        - C:\Users\xxxxxxxx\Desktop\Log_Directory\AuditRecords.txt
      input_type: log
      type: log
      #Ignore these, was testing earlier.
      # json.keys_under_root: true
      #filebeat.processors:
      # - decode_json_fields:
      #     fields: ['message']
      #     target: json
      # json.keys_under_root: true
      # json.add_error_key: true
      # json.ignore_decoding_error: true
      # json.message_key: message

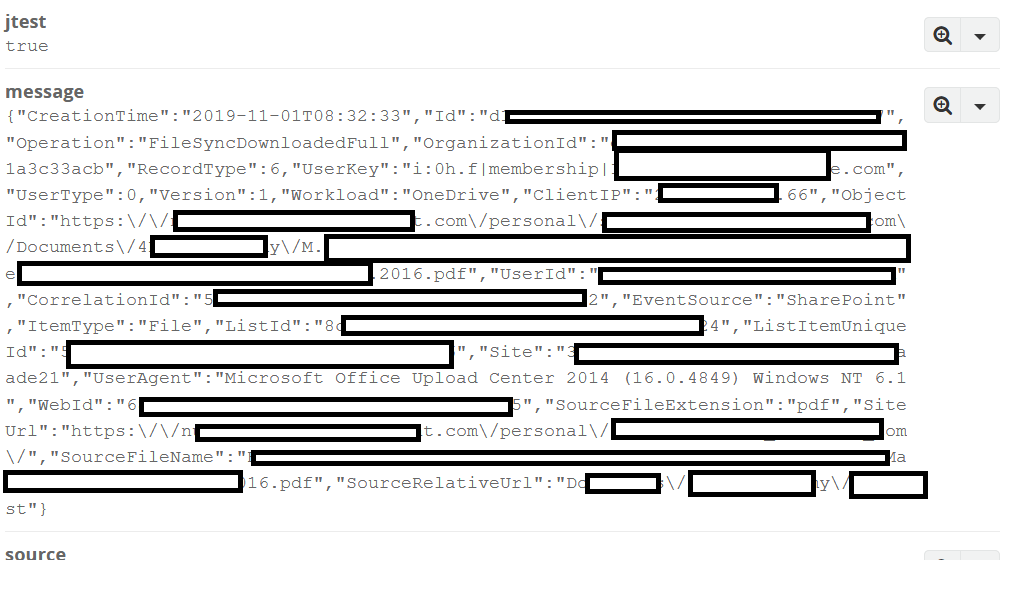

This is properly (seemingly) creating messages where “$message.message” matches the JSON of the line, here’s an example of what ends up in Graylog:

However, I haven't yet found a good way to extract the JSON from `$message.message` and have it create the corresponding fields. The messages don't all have the same structure, but they are all JSON, so I can't build a fixed set of extractors, and I haven't found another way to solve this.
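One possible alternative to extractors, offered as an untested sketch rather than a confirmed fix, is to have Filebeat decode the JSON before shipping the event, using its `decode_json_fields` processor on the input. It reuses the configuration from this post unchanged except for the added `processors` section. With `target: ""`, the decoded keys are merged into the root of the event and the original `message` string is kept. An assumption to verify: because the Beats input already prefixes Filebeat data (as with `filebeat_source`), the decoded fields may also show up in Graylog with a `filebeat_` prefix.

```yaml
# Sketch only (assumption, untested): the original sidecar settings,
# plus a decode_json_fields processor on the log input.
output.logstash:
  hosts: ["graylog.xxxxxxxxxxx.com:9876"]
path:
  data: C:\Program Files\Graylog\sidecar\cache\filebeat\data
  logs: C:\Program Files\Graylog\sidecar\logs
tags:
  - windows
filebeat.inputs:
- paths:
    - C:\Users\xxxxxxxx\Desktop\Log_Directory\AuditRecords.txt
  input_type: log
  type: log
  processors:
    # Parse the JSON text held in "message".
    - decode_json_fields:
        fields: ["message"]
        # An empty target merges the decoded keys into the event root
        # instead of replacing or nesting under "message".
        target: ""
```

Using `target: json`, as in the commented-out test, would instead nest every decoded key under a `json` object rather than placing it at the top level of the event.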

Here is one pipeline rule I've tried (it adds the `jtest: true` field shown in the screenshot above):

    rule "extract O365 data from JSON"
    when
      contains(to_string($message.filebeat_source), "C:\\Users\\xxxxxx\\Desktop\\Log_Directory\\AuditRecords.txt", true)
    then
      let json_data = parse_json(to_string($message.message));
      let jmap = to_map(json_data);
      let jtest = is_json(json_data);
      set_field("jtest", jtest);
      set_fields(jmap);
    end

Any help or guidance would be EXTREMELY appreciated.