Hi Tomas

Thank you for getting back to me.

The datanodes and Graylog servers are deployed directly, with 3 Graylog servers and 3 datanodes in total.

I followed the "Ubuntu Installation: Multiple Graylog Nodes" guide. I can't post the link for some reason, sorry. The installation is exactly as recommended in the guide.

Each datanode works individually, but they do not work together. All required ports are accessible from every machine in the cluster and on the network, both via DNS names and IP addresses. The datanodes can access the MongoDB replica set without any issues.
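For anyone who wants to repeat the reachability check, here is a minimal sketch of how it could be done. The host names are placeholders, not my real ones, and the port list is an assumption: the defaults shown in the configuration below (8999, 9200, 9300) plus MongoDB's 27017.

```python
import socket

# Hypothetical host names; replace with the real datanode addresses.
HOSTS = ["datanode1.example", "datanode2.example", "datanode3.example"]

# Assumed ports: DataNode REST API, OpenSearch HTTP, OpenSearch transport,
# and MongoDB (defaults taken from the configuration file and mongodb_uri).
PORTS = [8999, 9200, 9300, 27017]


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


def check_all(hosts, ports, timeout=2.0):
    """Return a dict mapping (host, port) to True/False reachability."""
    return {(h, p): port_open(h, p, timeout) for h in hosts for p in ports}
```

Running `check_all(HOSTS, PORTS)` on each machine in turn, once with DNS names and once with IP addresses, gives a full reachability matrix. It only tests that a TCP connection can be opened, not that the service behind the port is healthy.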

I haven't implemented any special security features yet, just what's in the multiple Graylog node installation guide.

The configuration for datanodes is the following:

#####################################

# GRAYLOG DATANODE CONFIGURATION FILE

#####################################

#

# This is the Graylog DataNode configuration file. The file has to use ISO 8859-1/Latin-1 character encoding.

# Characters that cannot be directly represented in this encoding can be written using Unicode escapes

# as defined in Chapter 3. Lexical Structure, using the \u prefix.

# For example, \u002c.

#

# * Entries are generally expected to be a single line of the form, one of the following:

#

# propertyName=propertyValue

# propertyName:propertyValue

#

# * White space that appears between the property name and property value is ignored,

# so the following are equivalent:

#

# name=Stephen

# name = Stephen

#

# * White space at the beginning of the line is also ignored.

#

# * Lines that start with the comment characters ! or # are ignored. Blank lines are also ignored.

#

# * The property value is generally terminated by the end of the line. White space following the

# property value is not ignored, and is treated as part of the property value.

#

# * A property value can span several lines if each line is terminated by a backslash ('\') character.

# For example:

#

# targetCities=\

# Detroit,\

# Chicago,\

# Los Angeles

#

# This is equivalent to targetCities=Detroit,Chicago,Los Angeles (white space at the beginning of lines is ignored).

#

# * The characters newline, carriage return, and tab can be inserted with characters \n, \r, and \t, respectively.

#

# * The backslash character must be escaped as a double backslash. For example:

#

# path=c:\\docs\\doc1

#

# The auto-generated node ID will be stored in this file and read after restarts. It is a good idea

# to use an absolute file path here if you are starting Graylog DataNode from init scripts or similar.

node_id_file = /etc/graylog/datanode/node-id

# location of your data-node configuration files - put additional files like manually created certificates etc. here

config_location = /etc/graylog/datanode

opensearch_heap = 8g

# You MUST set a secret to secure/pepper the stored user passwords here. Use at least 64 characters.

# Generate one by using for example: pwgen -N 1 -s 96

# ATTENTION: This value must be the same on all Graylog and Datanode nodes in the cluster.

# Changing this value after installation will render all user sessions and encrypted values in the database invalid. (e.g. encrypted access tokens)

password_secret = [redacted]

# The default root user is named 'admin'

#root_username = admin

# You MUST specify a hash password for the root user (which you only need to initially set up the

# system and in case you lose connectivity to your authentication backend)

# This password cannot be changed using the API or via the web interface. If you need to change it,

# modify it in this file.

# Create one by using for example: echo -n yourpassword | sha256sum

# and put the resulting hash value into the following line

root_password_sha2 =

# connection to MongoDB, shared with the Graylog server

# See Connection Strings - Database Manual - MongoDB Docs for details

mongodb_uri = mongodb://10.13.18.1:27017,10.13.18.2:27017,10.13.18.3:27017/graylog?replicaSet=glset0

#### HTTP bind address

#

# The network interface used by the Graylog DataNode to bind all services.

#

bind_address = 0.0.0.0

#### Hostname

#

# if you need to specify the hostname to use (because looking it up programmatically gives wrong results)

hostname = [hostname of datanode]

#### HTTP port

#

# The port where the DataNode REST api is listening

#

# datanode_http_port = 8999

#### HTTP publish URI

#

# This configuration should be used if you want to connect to this Graylog DataNode’s REST API and it is available on

# another network interface than $http_bind_address,

# for example if the machine has multiple network interfaces or is behind a NAT gateway.

# http_publish_uri =

#### OpenSearch HTTP port

#

# The port where OpenSearch HTTP is listening on

#

# opensearch_http_port = 9200

#### OpenSearch transport port

#

# The port where OpenSearch transports is listening on

#

# opensearch_transport_port = 9300

#### OpenSearch node name config option

#

# use this, if your node name should be different from the hostname that’s found by programmatically looking it up

#

node_name = [name of the node]

#### OpenSearch discovery_seed_hosts config option

#

# if you’re not using the automatic data node setup and want to create a cluster, you have to setup the discovery seed hosts

#

# opensearch_discovery_seed_hosts =

#### OpenSearch initial_manager_nodes config option

#

# if you’re not using the automatic data node setup and want to create a cluster, you have to setup the initial manager nodes

# make sure to remove this setting after the cluster has formed

#

#initial_cluster_manager_nodes = gldatanode1-dcde2

node_roles = data,ingest,remote_cluster_client

#### OpenSearch folders

#

# set these if you need OpenSearch to be located in a special place or want to include an existing version

#

# Root directory of the used opensearch distribution

opensearch_location = /usr/share/graylog-datanode/dist

opensearch_config_location = /var/lib/graylog-datanode/opensearch/config

opensearch_data_location = /var/lib/graylog-datanode/opensearch/data

opensearch_logs_location = /var/log/graylog-datanode/opensearch

#### OpenSearch Certificate bundles for transport and http layer security

#

# if you’re not using the automatic data node setup, you can manually configure your SSL certificates

# transport_certificate = datanode-transport-certificates.p12

# transport_certificate_password = password

# http_certificate = datanode-http-certificates.p12

# http_certificate_password = password

#### OpenSearch log buffers size

#

# the number of lines from stderr and stdout of the OpenSearch process that are buffered inside the DataNode for logging etc.

#

# process_logs_buffer_size = 500

#### OpenSearch JWT token usage

#

# communication between Graylog and OpenSearch is secured by JWT. These are the defaults used for the token usage

# adjust them, if you have special needs.

#

# indexer_jwt_auth_token_caching_duration = 60s

# indexer_jwt_auth_token_expiration_duration = 180s

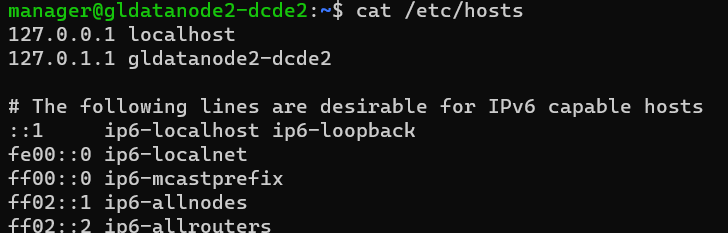

There are no duplicate hostnames, IP addresses, or MAC addresses in the configuration or in the DNS entries.
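A duplicate check like this can be scripted. The sketch below is only an illustration: the inventory format (a list of dicts with `hostname`, `ip`, and `mac` keys) and the sample values are made up for the example and are not my real nodes.

```python
from collections import Counter


def find_duplicates(nodes):
    """Return {field: [values seen more than once]} for a list of node dicts."""
    dupes = {}
    for field in ("hostname", "ip", "mac"):
        # Compare case-insensitively, since hostnames and MACs are not case-sensitive.
        counts = Counter(node[field].lower() for node in nodes)
        repeated = sorted(value for value, n in counts.items() if n > 1)
        if repeated:
            dupes[field] = repeated
    return dupes


# Hypothetical inventory using documentation-only addresses.
nodes = [
    {"hostname": "datanode1.example", "ip": "192.0.2.11", "mac": "02:00:00:00:00:01"},
    {"hostname": "datanode2.example", "ip": "192.0.2.12", "mac": "02:00:00:00:00:02"},
    {"hostname": "datanode3.example", "ip": "192.0.2.13", "mac": "02:00:00:00:00:03"},
]

print(find_duplicates(nodes))
```

An empty dict means no duplicates were found in the inventory that was passed in. DNS records would still need to be checked separately.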