Hello Graylog and Community,

I come from a background in an event logging and analytics product that starts with an S and requires sacrificing a kidney to afford. I have been looking for open-source alternatives that aim to disrupt the space and keep iterating and getting better over time. I have seen ELK and it's okay, but I wanted an easier-to-maintain, out-of-the-box solution with great documentation and an open-core approach: driven engineers, plus an enterprise following big enough to pay the bills and keep the lights on (and hopefully they are pro open source too!). I like a team running the project that believes in open source and enjoys a community of users who act as great QA and even help build and PR awesome functionality once familiar with the application. I get the feeling Graylog fits this, and I am very excited to dig into it a bit and potentially POC it in the near future. I do have a few questions. From my searching I don't think many of them have been asked before, but forgive me if they have and link me to an old post if possible.

I work in the API and API Gateway space, so most of my questions are geared around that.

Some features I am looking for from the open-source version are:

- Programmatic dashboarding: Say a customer calls tons of APIs through an API gateway. For every new customer I have on the gateway, can Graylog create a dashboard programmatically (based on JSON/XML or something posted to an endpoint) that the customer can later view at a unique, predictable URL path, showing things like which APIs they call, which HTTP status codes they are seeing, etc.?

I see info here: http://docs.graylog.org/en/3.0/pages/dashboards.html, which visually tells me how to do it all. But say I make one dashboard visually (maybe it exports as JSON/XML?) and at that point there is one parameter, such as the consumer username, that I would like to use to generate tons of custom dashboards, giving each consumer a unique dashboard showcasing their API experience. Then Graylog could expose an API endpoint, I POST that JSON format with a different consumer name embedded, and BAM, I get an awesome dashboard! Is this in the works, or is it a new idea that hasn't been considered?
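To make the idea concrete, here is a minimal Python sketch of the templating half only, assuming a dashboard definition has been exported or hand-written as JSON. The template keys, the `{{consumer}}` placeholder, the `consumer` field and the `/dashboards/consumers/<name>` path are all hypothetical; nothing here is a Graylog API, and whether Graylog accepts such a body is exactly the open question.

```python
import json
import re

# Hypothetical template (keys, placeholder and field names are assumptions,
# not Graylog's dashboard format).
TEMPLATE = {
    "title": "API experience for {{consumer}}",
    "description": "Per-consumer API gateway dashboard",
    "widgets": [
        {"kind": "count_by_field", "field": "HTTPStatus",
         "query": 'consumer:"{{consumer}}"'},
        {"kind": "count_by_field", "field": "URI",
         "query": 'consumer:"{{consumer}}"'},
    ],
}

# Simple names only, so a username cannot inject query syntax or path segments.
SAFE_NAME = re.compile(r"[A-Za-z0-9_-][A-Za-z0-9_.-]*")


def render(node, consumer):
    """Recursively substitute the placeholder in every string of the template."""
    if isinstance(node, str):
        return node.replace("{{consumer}}", consumer)
    if isinstance(node, list):
        return [render(item, consumer) for item in node]
    if isinstance(node, dict):
        return {key: render(value, consumer) for key, value in node.items()}
    return node  # numbers, booleans and None pass through unchanged


def dashboard_for(consumer):
    """Return (predictable path, JSON body) for one consumer's dashboard."""
    if not SAFE_NAME.fullmatch(consumer):
        raise ValueError(f"unsafe consumer name: {consumer!r}")
    path = f"/dashboards/consumers/{consumer}"  # hypothetical URL scheme
    return path, json.dumps(render(TEMPLATE, consumer))


if __name__ == "__main__":
    for name in ["acme-corp", "globex"]:
        print(*dashboard_for(name))
```

Each generated body would then be POSTed to whatever dashboard endpoint the REST API actually offers, if one exists.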

Edit - Ooo, found the REST API docs page: https://docs.graylog.org/en/3.1/pages/configuration/rest_api.html#graylog-rest-api, which tells me I need to install Graylog and start browsing the Swagger page, lol. I guess that keeps you from having to maintain everything it supports directly in the docs. I'll keep my fingers crossed that dashboard control is present and the UI is just interacting with the API to produce dashboards!


- LDAP control for admins vs. read-only users: Answering this one myself, seems a resounding yes, go Graylog!

- HTTP intake endpoint accepting JSON: Yay, I do see it here:

https://docs.graylog.org/en/3.1/pages/gelf.html#example-payload

My follow-up question: much like other solutions, does Graylog allow "batching" of these JSON messages, i.e. sending multiple messages in a single call? Like:

{ ... }{ ... }{ ... }{ ... }

Not a hard requirement, but it sure would be nice if I could send, say, 10-20 messages in a single call, since they won't be very big.
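If the input takes only one message per call, a client that currently emits back-to-back objects like `{ ... }{ ... }` can still be adapted. This Python sketch splits such a stream with the standard library's `json.JSONDecoder.raw_decode` and prepares one POST per message. The URL `http://localhost:12201/gelf` is an assumption for a local GELF HTTP input; the requests are built here, not sent.

```python
import json
import urllib.request

# Assumption: a GELF HTTP input on localhost port 12201; adjust to your setup.
GELF_URL = "http://localhost:12201/gelf"


def split_concatenated(stream):
    """Split '{...}{...}' text (back-to-back JSON objects, which is not valid
    JSON as a whole) into a list of dicts."""
    decoder = json.JSONDecoder()
    messages, pos = [], 0
    while True:
        # raw_decode does not skip leading whitespace, so skip it here.
        while pos < len(stream) and stream[pos].isspace():
            pos += 1
        if pos == len(stream):
            return messages
        obj, pos = decoder.raw_decode(stream, pos)
        messages.append(obj)


def build_requests(messages, url=GELF_URL):
    """Prepare one JSON POST request per message."""
    return [
        urllib.request.Request(
            url,
            data=json.dumps(msg).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        for msg in messages
    ]
```

Sending is then `urllib.request.urlopen(req, timeout=5)` for each prepared request.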

Edit - Ahh, I see it here: no dice on multiple messages. The PR kind of died off once it was pointed out that a single JSON payload prepared by the client, which the server has to read into memory, is dangerous: https://github.com/Graylog2/graylog2-server/pull/5924. That said, it's not a breaking change from the existing behavior, and a single huge payload carries the same risk anyway. It's one of those features where, if you use it, you understand the risks. I would say a 20 KB or so batched log payload isn't that big in today's world. Average API call sizes I see in the wild are around 9 KB. Cool to know I can fork and use this PR if I want batched support right now, in a structure like:


[
{"version": "1.1", "host": "..."},
{"version": "1.1", "host": "..."},
{"version": "1.1", "host": "..."}
]

This is actually more conformant than our existing solution, which sends invalid JSON that is essentially multiple JSON messages concatenated, like the earlier sample I gave above.
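For the array structure above, which per the PR discussion only a forked build would accept, a client would also want to cap each payload. A minimal Python sketch, assuming a cap of 20 messages and 20 KB per array (both figures taken from the estimates in this post, not limits Graylog defines):

```python
import json


def batch_payloads(messages, max_count=20, max_bytes=20 * 1024):
    """Group GELF dicts into JSON-array payloads ([{...},{...}]) capped by
    message count and by serialized size in bytes."""
    payloads, current, size = [], [], 2  # 2 bytes for the enclosing "[]"
    for msg in messages:
        encoded = json.dumps(msg, separators=(",", ":"))
        n = len(encoded.encode("utf-8"))
        extra = n + (1 if current else 0)  # +1 for the separating comma
        if current and (len(current) >= max_count or size + extra > max_bytes):
            # Flush the full batch and start a new one with this message.
            payloads.append(("[" + ",".join(current) + "]").encode("utf-8"))
            current, size, extra = [], 2, n
        current.append(encoded)
        size += extra
    if current:
        payloads.append(("[" + ",".join(current) + "]").encode("utf-8"))
    return payloads
```

A single message larger than `max_bytes` still goes out alone in its own array rather than being dropped, so the cap bounds batching, not individual message size.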

Also, going by the sample alone, it seems the JSON structure Graylog accepts is flat JSON only?

Something like:

{
"Tries":[{"balancer_latency":0,"port":443,"balancer_start":1570940875143,"ip":"10.xxx.xxx.xxx"},...]
}

That would not work, right? I could also flatten it to something like tries1 through tries5 (max) as a flat design, but it would be nice to support more complex structures. Yeah, it seems I can answer my own question here actually: https://github.com/Graylog2/graylog2-server/issues/5945. Since I fully control the JSON payload I send, I can flatten everything out, so no major issues here, but I think enabling structured JSON will help take Graylog to new heights!
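Flattening on the client side can be automated rather than hand-written as tries1..5. A minimal Python sketch, assuming underscore-joined key paths such as `Tries_0_balancer_latency` are acceptable field names (the naming scheme is an illustrative choice, not something Graylog prescribes). GELF additional fields carry a leading underscore, which the second helper adds:

```python
import json


def flatten(value, prefix="", sep="_"):
    """Flatten nested dicts/lists into one level:
    {"Tries": [{"port": 443}]} -> {"Tries_0_port": 443}."""
    if isinstance(value, dict):
        pairs = [(str(k), v) for k, v in value.items()]
    elif isinstance(value, list):
        pairs = [(str(i), v) for i, v in enumerate(value)]
    else:
        return {prefix: value}  # scalar leaf
    if not pairs:
        # Keep empty containers visible as a JSON string instead of dropping them.
        return {prefix: json.dumps(value)} if prefix else {}
    flat = {}
    for key, child in pairs:
        flat.update(flatten(child, f"{prefix}{sep}{key}" if prefix else key, sep))
    return flat


def gelf_additional_fields(event):
    """Prefix every flattened key with '_' as GELF additional fields."""
    return {"_" + key: val for key, val in flatten(event).items()}
```

One caveat: a literal key such as `Tries_0` elsewhere in the payload could collide with a generated one, so the separator should be something that never appears in real keys.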


- TLS / auth for HTTP log intake: Easy enough. In my mind, I will put a Kong gateway sidecar in front to serve a TLS endpoint, provide key auth or OAuth2/JWT auth, and reverse proxy to the GELF endpoint on localhost. Huzzah!
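On the client side, logging through such a sidecar is an HTTPS POST with a credential attached. A minimal Python sketch, assuming a hypothetical sidecar URL `https://logs.example.com/gelf` and Kong's key-auth plugin configured to read the key from an `apikey` header (the header name is configurable in Kong, so treat it as an assumption):

```python
import json
import ssl
import urllib.request

# Hypothetical TLS endpoint served by the Kong sidecar, which is assumed to
# reverse-proxy to the GELF HTTP input on localhost.
SIDECAR_URL = "https://logs.example.com/gelf"


def build_authenticated_request(message, api_key, url=SIDECAR_URL):
    """Prepare a JSON POST carrying the key-auth credential."""
    return urllib.request.Request(
        url,
        data=json.dumps(message).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "apikey": api_key,  # assumption: key-auth reads this header
        },
    )


def send(request, timeout=5.0):
    """Send over TLS, verifying the sidecar's certificate; return HTTP status."""
    context = ssl.create_default_context()
    with urllib.request.urlopen(request, timeout=timeout, context=context) as resp:
        return resp.status
```

For OAuth2/JWT instead of key auth, the same request would carry an `Authorization: Bearer <token>` header in place of `apikey`.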

- User experience: Say I use the HTTP JSON logging. When browsing JSON events in the UI, is Graylog smart enough to natively pretty-print that JSON so it is pleasant to view?

- How advanced does the querying get? I understand I mostly select fields and do various operations on them. Can Graylog essentially do any of these?

Basic wildcard searching on values:

index=XXX URI=*myurl/resource/endpoint1* OR URI=*myurl/resource/endpoint2*

Piping searches to produce basic visuals; for example, the following would make a bar chart of HTTP statuses:

index=XXX URI=*myurl/resource/endpoint1* OR URI=*myurl/resource/endpoint2* | chart count by HTTPStatus

Lastly, something like:

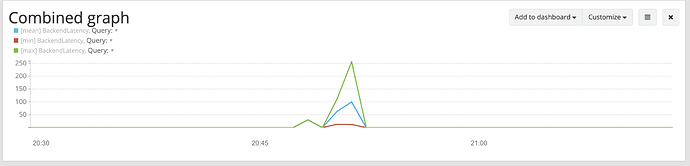

index=XXX URI=*myurl/resource/endpoint1* OR URI=*myurl/resource/endpoint2* | timechart p50(BackendLatency) p95(BackendLatency) p99(BackendLatency)

This would yield a pretty p50/p95/p99 chart over time for reviewing latency results visually.
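Independent of how Graylog expresses them, the last two searches boil down to a count per field value and per-time-bucket latency percentiles. This Python sketch pins down those semantics over in-memory events, assuming each event carries an epoch-seconds `timestamp` plus the `HTTPStatus` and `BackendLatency` fields from the queries above. It uses the nearest-rank percentile definition; other tools may interpolate or, like Elasticsearch's percentiles aggregation, approximate, so results can differ slightly.

```python
from collections import Counter, defaultdict


def count_by(events, field):
    """Roughly what '| chart count by <field>' produces: counts per value."""
    return Counter(e[field] for e in events if field in e)


def nearest_rank(sorted_values, pct):
    """Nearest-rank percentile of a sorted, non-empty list (integer pct)."""
    rank = max(1, (pct * len(sorted_values) + 99) // 100)  # ceil(pct*n/100)
    return sorted_values[rank - 1]


def timechart_percentiles(events, field, bucket_seconds=60, pcts=(50, 95, 99)):
    """Roughly '| timechart p50(f) p95(f) p99(f)': percentiles per time bucket,
    keyed by bucket start time (epoch seconds)."""
    buckets = defaultdict(list)
    for e in events:
        if field in e:
            start = int(e["timestamp"] // bucket_seconds) * bucket_seconds
            buckets[start].append(e[field])
    chart = {}
    for start in sorted(buckets):
        values = sorted(buckets[start])
        chart[start] = {f"p{p}": nearest_rank(values, p) for p in pcts}
    return chart
```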

Of course, I don't need a query language exactly like this; the important part is whether Graylog, in its own way, can produce the same end results. I'm also assuming things like greater-than/less-than operators are supported on numeric JSON values (hopefully correct).


- Performance: Say I am generating about 200 million transactions a day, with a max of around 3,000 TPS. Does this seem within the ballpark of what the open-core HTTP event logging can handle? Log messages are around 600-800 bytes each. Is anyone running Graylog who could ballpark whether such volume could be handled on a single beefy CentOS/RHEL VM with X CPU, X RAM, and X disk for Graylog, Elasticsearch, and MongoDB? That is up to about 0.16 TB of data per day (200 million × 800 bytes). I would like a retention period of 3 months as a start.
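As a sanity check on the numbers: 200 million transactions over 86,400 seconds averages about 2,315 TPS, so the 3,000 TPS peak is roughly 1.3x the average, and 600-800 bytes per message gives 0.12-0.16 TB per day, or about 10.8-14.4 TB of raw message data over 90 days. The Python sketch below reproduces that arithmetic. It counts raw message bytes only and ignores Elasticsearch indexing overhead, compression and replicas (unless `replicas` is set), so it is a rough starting point, not a Graylog sizing recommendation.

```python
SECONDS_PER_DAY = 86_400


def sizing(tx_per_day, bytes_per_msg, retention_days, replicas=0):
    """Back-of-envelope volume from raw message bytes only."""
    bytes_per_day = tx_per_day * bytes_per_msg
    return {
        "avg_tps": tx_per_day / SECONDS_PER_DAY,
        "tb_per_day": bytes_per_day / 1e12,
        # Each replica stores a full extra copy of the retained data.
        "tb_retained": bytes_per_day * retention_days * (1 + replicas) / 1e12,
    }


for size in (600, 800):
    print(size, sizing(200_000_000, size, 90))
```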

- RESTful management / data retrieval: My understanding is that there is a REST API to pull logged event data or get aggregate details in a response? So, is it possible to have all events logged and then run a REST query on the event data for things like:

- Count of transactions over time for all consumers of the API

- Count of transactions over time for a specific consumer of an API

- p50/p95/p99 latency performance of the API
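Once the REST API is up, each of those questions becomes a parameterized GET. The Python sketch below only builds URLs and an authenticated request. The host, the `/search/universal/relative[/histogram|/stats]` path layout and the `interval`/`field` parameters are assumptions modeled on Graylog 3.x's legacy universal search resources, so confirm them in your instance's Swagger browser. Access tokens are sent as basic auth with the token as username and the literal password `token`.

```python
import base64
import urllib.parse
import urllib.request

GRAYLOG_API = "http://graylog.example.com:9000/api"  # hypothetical host


def relative_query_url(kind, query, range_seconds, **extra):
    """Build a relative-time search URL; `kind` is e.g. "histogram", "stats"
    or None (path layout is an assumption to verify in the API browser)."""
    path = "/search/universal/relative" + (f"/{kind}" if kind else "")
    params = {"query": query, "range": range_seconds, **extra}
    return f"{GRAYLOG_API}{path}?{urllib.parse.urlencode(params)}"


def authed_get(url, token):
    """GET request authenticated with an access token as '<token>:token'."""
    creds = base64.b64encode(f"{token}:token".encode()).decode()
    return urllib.request.Request(
        url, headers={"Authorization": f"Basic {creds}", "Accept": "application/json"}
    )


# The three questions, under the assumptions above (hypothetical field names):
all_consumers = relative_query_url("histogram", "*", 86_400, interval="hour")
one_consumer = relative_query_url("histogram", 'consumer:"acme-corp"', 86_400, interval="hour")
latency = relative_query_url("stats", "*", 86_400, field="BackendLatency")
```

A stats-style resource would presumably return summary figures such as mean and max; whether p50/p95/p99 are available directly is worth checking in the API browser.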

Will keep digging and will edit this post if I answer any of my own questions; there is certainly a ton to comb over. Looking forward to getting involved!

Thanks,

Jeremy