Hi @jan,

Thanks for your comments.

I am sure there is no problem with ES: if I stop one Graylog server instance and run the input on only the remaining node, I can see all the logs.

As you recommended, I tried logging in to the web UI of each machine directly, and I can log in without any issues using the IP:9000 format.

But if I go through the load balancer (hostname), I get the following error:

I verified all MongoDB instances and each one is running without issues. The primary, secondary and arbiter assume their roles correctly.

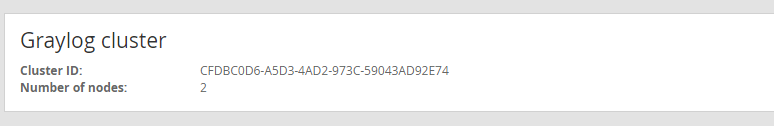

The whole snapshot shows that both the Graylog cluster and the ES cluster are running fine.

ES cluster state (curl output):

```json
{
  "cluster_name" : "elastic-graylog-cluster",
  "version" : 12,
  "state_uuid" : "Pytuw-ocRAGebXhdj0edXw",
  "master_node" : "X-EnoXqQQPm1kAb-DlN_jQ",
  "blocks" : { },
  "nodes" : {
    "iCUthA65RUOxFx3BcUdN2w" : {
      "name" : "es-graylog-node2",
      "transport_address" : "10.12.86.75:9300",
      "attributes" : { }
    },
    "X-EnoXqQQPm1kAb-DlN_jQ" : {
      "name" : "es-graylog-node1",
      "transport_address" : "10.12.86.74:9300",
      "attributes" : { }
    },
    "LZqfGi3PSme7O52I-5Tt4g" : {
      "name" : "graylog-51bdd951-e7ac-41ef-bdc2-543514f40b60",
      "transport_address" : "10.12.86.74:9350",
      "attributes" : {
        "client" : "true",
        "data" : "false",
        "master" : "false"
      }
    }
  },
  "metadata" : {
    "cluster_uuid" : "7hvbe9W4Tj22aURgU97c_w",
    "templates" : {
      "graylog-internal" : {
        "template" : "graylog_*",
        "order" : -1,
        "settings" : {
          "index" : {
            "analysis" : {
              "analyzer" : {
                "analyzer_keyword" : {
                  "filter" : "lowercase",
                  "tokenizer" : "keyword"
                }
              }
            }
          }
        },
        "mappings" : {
          "message" : {
            "_source" : {
              "enabled" : true
            },
            "dynamic_templates" : [ {
              "internal_fields" : {
                "mapping" : {
                  "index" : "not_analyzed",
                  "type" : "string"
                },
                "match" : "gl2_*"
              }
            }, {
              "store_generic" : {
                "mapping" : {
                  "index" : "not_analyzed"
                },
                "match" : "*"
              }
            } ],
            "properties" : {
              "full_message" : {
                "analyzer" : "standard",
                "index" : "analyzed",
                "type" : "string"
              },
              "streams" : {
                "index" : "not_analyzed",
                "type" : "string"
              },
              "source" : {
                "analyzer" : "analyzer_keyword",
                "index" : "analyzed",
                "type" : "string"
              },
              "message" : {
                "analyzer" : "standard",
                "index" : "analyzed",
                "type" : "string"
              },
              "timestamp" : {
                "format" : "yyyy-MM-dd HH:mm:ss.SSS",
                "type" : "date"
              }
            }
          }
        }
      }
    },
    "indices" : {
      "graylog_0" : {
        "state" : "open",
        "settings" : {
          "index" : {
            "creation_date" : "1504888203538",
            "analysis" : {
              "analyzer" : {
                "analyzer_keyword" : {
                  "filter" : "lowercase",
                  "tokenizer" : "keyword"
                }
              }
            },
            "number_of_shards" : "4",
            "number_of_replicas" : "0",
            "uuid" : "R2Im6rBAQiO5wIiKu0ocWA",
            "version" : {
              "created" : "2040699"
            }
          }
        },
        "mappings" : {
          "message" : {
            "dynamic_templates" : [ {
              "internal_fields" : {
                "mapping" : {
                  "index" : "not_analyzed",
                  "type" : "string"
                },
                "match" : "gl2_*"
              }
            }, {
              "store_generic" : {
                "mapping" : {
                  "index" : "not_analyzed"
                },
                "match" : "*"
              }
            } ],
            "properties" : {
              "full_message" : {
                "analyzer" : "standard",
                "type" : "string"
              },
              "level" : {
                "type" : "long"
              },
              "gl2_remote_ip" : {
                "index" : "not_analyzed",
                "type" : "string"
              },
              "gl2_remote_port" : {
                "index" : "not_analyzed",
                "type" : "string"
              },
              "streams" : {
                "index" : "not_analyzed",
                "type" : "string"
              },
              "gl2_source_node" : {
                "index" : "not_analyzed",
                "type" : "string"
              },
              "source" : {
                "analyzer" : "analyzer_keyword",
                "type" : "string"
              },
              "gl2_source_input" : {
                "index" : "not_analyzed",
                "type" : "string"
              },
              "message" : {
                "analyzer" : "standard",
                "type" : "string"
              },
              "facility" : {
                "index" : "not_analyzed",
                "type" : "string"
              },
              "timestamp" : {
                "format" : "yyyy-MM-dd HH:mm:ss.SSS",
                "type" : "date"
              }
            }
          }
        },
        "aliases" : [ "graylog_deflector" ]
      }
    }
  },
  "routing_table" : {
    "indices" : {
      "graylog_0" : {
        "shards" : {
          "2" : [ {
            "state" : "STARTED",
            "primary" : true,
            "node" : "X-EnoXqQQPm1kAb-DlN_jQ",
            "relocating_node" : null,
            "shard" : 2,
            "index" : "graylog_0",
            "version" : 22,
            "allocation_id" : {
              "id" : "QKPTwga-TEG5VhERida2Xg"
            }
          } ],
          "1" : [ {
            "state" : "STARTED",
            "primary" : true,
            "node" : "iCUthA65RUOxFx3BcUdN2w",
            "relocating_node" : null,
            "shard" : 1,
            "index" : "graylog_0",
            "version" : 24,
            "allocation_id" : {
              "id" : "eoftdncZSmO7jUykWXWtzQ"
            }
          } ],
          "3" : [ {
            "state" : "STARTED",
            "primary" : true,
            "node" : "iCUthA65RUOxFx3BcUdN2w",
            "relocating_node" : null,
            "shard" : 3,
            "index" : "graylog_0",
            "version" : 24,
            "allocation_id" : {
              "id" : "LVDgW3wRRnyCZnYT2EX8hA"
            }
          } ],
          "0" : [ {
            "state" : "STARTED",
            "primary" : true,
            "node" : "X-EnoXqQQPm1kAb-DlN_jQ",
            "relocating_node" : null,
            "shard" : 0,
            "index" : "graylog_0",
            "version" : 22,
            "allocation_id" : {
              "id" : "epTALwAOTMyOxE0O5edfOQ"
            }
          } ]
        }
      }
    }
  },
  "routing_nodes" : {
    "unassigned" : [ ],
    "nodes" : {
      "X-EnoXqQQPm1kAb-DlN_jQ" : [ {
        "state" : "STARTED",
        "primary" : true,
        "node" : "X-EnoXqQQPm1kAb-DlN_jQ",
        "relocating_node" : null,
        "shard" : 2,
        "index" : "graylog_0",
        "version" : 22,
        "allocation_id" : {
          "id" : "QKPTwga-TEG5VhERida2Xg"
        }
      }, {
        "state" : "STARTED",
        "primary" : true,
        "node" : "X-EnoXqQQPm1kAb-DlN_jQ",
        "relocating_node" : null,
        "shard" : 0,
        "index" : "graylog_0",
        "version" : 22,
        "allocation_id" : {
          "id" : "epTALwAOTMyOxE0O5edfOQ"
        }
      } ],
      "iCUthA65RUOxFx3BcUdN2w" : [ {
        "state" : "STARTED",
        "primary" : true,
        "node" : "iCUthA65RUOxFx3BcUdN2w",
        "relocating_node" : null,
        "shard" : 1,
        "index" : "graylog_0",
        "version" : 24,
        "allocation_id" : {
          "id" : "eoftdncZSmO7jUykWXWtzQ"
        }
      }, {
        "state" : "STARTED",
        "primary" : true,
        "node" : "iCUthA65RUOxFx3BcUdN2w",
        "relocating_node" : null,
        "shard" : 3,
        "index" : "graylog_0",
        "version" : 24,
        "allocation_id" : {
          "id" : "LVDgW3wRRnyCZnYT2EX8hA"
        }
      } ]
    }
  }
}
```
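The dump is long, so to double-check the shard layout I reduced it to a per-node summary. This is a minimal Python sketch, assuming the cluster state JSON has been saved somewhere (the file name `cluster_state.json` and the helper names `summarize_shards` and `problems` are hypothetical, not part of ES or Graylog). The embedded sample is a trimmed excerpt of the output above, keeping only the fields the sketch reads:

```python
import json
from collections import defaultdict

# Trimmed excerpt of the cluster state above (only the fields this sketch reads).
SAMPLE_STATE = """
{
  "nodes": {
    "iCUthA65RUOxFx3BcUdN2w": {"name": "es-graylog-node2"},
    "X-EnoXqQQPm1kAb-DlN_jQ": {"name": "es-graylog-node1"},
    "LZqfGi3PSme7O52I-5Tt4g": {"name": "graylog-51bdd951-e7ac-41ef-bdc2-543514f40b60"}
  },
  "routing_nodes": {
    "unassigned": [],
    "nodes": {
      "X-EnoXqQQPm1kAb-DlN_jQ": [
        {"index": "graylog_0", "shard": 2, "state": "STARTED", "primary": true},
        {"index": "graylog_0", "shard": 0, "state": "STARTED", "primary": true}
      ],
      "iCUthA65RUOxFx3BcUdN2w": [
        {"index": "graylog_0", "shard": 1, "state": "STARTED", "primary": true},
        {"index": "graylog_0", "shard": 3, "state": "STARTED", "primary": true}
      ]
    }
  }
}
"""


def summarize_shards(state: dict) -> dict:
    """Map each data node's name to a sorted list of (index, shard, state) tuples."""
    # routing_nodes is keyed by node ID; translate IDs into the readable node names.
    names = {node_id: info["name"] for node_id, info in state["nodes"].items()}
    summary = defaultdict(list)
    for node_id, shards in state["routing_nodes"]["nodes"].items():
        for shard in shards:
            summary[names.get(node_id, node_id)].append(
                (shard["index"], shard["shard"], shard["state"])
            )
    return {name: sorted(entries) for name, entries in summary.items()}


def problems(state: dict) -> list:
    """Collect unassigned shards plus any assigned shard that is not STARTED."""
    issues = [f"unassigned: {s}" for s in state["routing_nodes"]["unassigned"]]
    for name, entries in summarize_shards(state).items():
        issues += [f"{name}: {e}" for e in entries if e[2] != "STARTED"]
    return issues


if __name__ == "__main__":
    # Hypothetical alternative: state = json.load(open("cluster_state.json"))
    state = json.loads(SAMPLE_STATE)
    for name, entries in summarize_shards(state).items():
        print(name, entries)
    print("problems:", problems(state) or "none")
```

With the values above, this lists shards 0 and 2 on es-graylog-node1 and shards 1 and 3 on es-graylog-node2, all STARTED, with no unassigned shards. That matches what I read in the dump. The Graylog client node appears under `nodes` but holds no shards, so it does not show up in the summary.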

From MongoDB:

```
rs0:PRIMARY> show databases;
admin 0.000GB
graylog 0.001GB
graylog2 0.001GB
local 0.033GB
rs0:PRIMARY> use graylog2;
switched to db graylog2
rs0:PRIMARY> show tables;
alarmcallbackconfigurations
alarmcallbackhistory
alerts
cluster_config
cluster_events
collectors
content_packs
grok_patterns
index_failures
index_ranges
index_sets
inputs
nodes
notifications
pipeline_processor_pipelines
pipeline_processor_pipelines_streams
pipeline_processor_rules
roles
sessions
streamrules
streams
system_messages
users
rs0:PRIMARY>
```

Node 1:

GRAYLOG Config:

```
is_master = true
node_id_file = /etc/graylog/server/node-id
password_secret = YrjGI2RF1k0Q2qYBXXXXXXXXXXXXXXXXYYYYYYYYYFwz3vcveIGgXpyUrEf8za5chZTtZoaz5
root_password_sha2 = 7676aaafb027c8jhfskjhsdfhsdkfjh2752f625b752e55e55b48e607e358
plugin_dir = /usr/share/graylog-server/plugin
rest_listen_uri = http://10.12.86.74:9000/api/
web_listen_uri = http://10.12.86.74:9000/
web_endpoint_uri = http://testgraylog.testdomain.com:9000/api/
rotation_strategy = count
elasticsearch_max_docs_per_index = 20000000
elasticsearch_max_number_of_indices = 20
retention_strategy = delete
elasticsearch_shards = 4
elasticsearch_replicas = 0
elasticsearch_index_prefix = graylog
allow_leading_wildcard_searches = false
allow_highlighting = false
elasticsearch_cluster_name = elastic-graylog-cluster
elasticsearch_discovery_zen_ping_multicast_enabled = false
elasticsearch_discovery_zen_ping_unicast_hosts = 10.12.86.74:9300, 10.12.86.75:9300
elasticsearch_network_host = 10.12.86.74
elasticsearch_analyzer = standard
output_batch_size = 500
output_flush_interval = 1
output_fault_count_threshold = 5
output_fault_penalty_seconds = 30
processbuffer_processors = 5
outputbuffer_processors = 3
processor_wait_strategy = blocking
ring_size = 65536
inputbuffer_ring_size = 65536
inputbuffer_processors = 2
inputbuffer_wait_strategy = blocking
message_journal_enabled = true
message_journal_dir = /var/lib/graylog-server/journal
lb_recognition_period_seconds = 3
mongodb_uri = mongodb://mongodb-primary:27017,mongodb-secondary:27017,mongodb-arbit:27017/graylog2
mongodb_max_connections = 1000
mongodb_threads_allowed_to_block_multiplier = 5
content_packs_dir = /usr/share/graylog-server/contentpacks
content_packs_auto_load = grok-patterns.json
proxied_requests_thread_pool_size = 32
```

ES Config:

```yaml
cluster.name: elastic-graylog-cluster
node.name: es-graylog-node1
path.data: /esdata
bootstrap.memory_lock: true
network.host: 10.12.86.74
discovery.zen.ping.multicast.enabled: false
discovery.zen.ping.unicast.hosts: ["10.12.86.74:9300", "10.12.86.75:9300"]
```

MongoDB1 Config:

```yaml
systemLog:
  destination: file
  logAppend: true
  path: /var/log/mongodb/mongod.log
storage:
  dbPath: /var/lib/mongo
  journal:
    enabled: true
processManagement:
  fork: true # fork and run in background
  pidFilePath: /var/run/mongodb/mongod.pid # location of pidfile
net:
  port: 27017
  bindIp: 10.12.86.74
replication:
  replSetName: rs0
```

Node 2:

GRAYLOG Config:

```
is_master = false
node_id_file = /etc/graylog/server/node-id
password_secret = YrjGI2RF1k0Q2qYBXXXXXXXXXXXXXXXXYYYYYYYYYFwz3vcveIGgXpyUrEf8za5chZTtZoaz5
root_password_sha2 = 7676aaafb027c8jhfskjhsdfhsdkfjh2752f625b752e55e55b48e607e358
plugin_dir = /usr/share/graylog-server/plugin
rest_listen_uri = http://10.12.86.75:9000/api/
web_listen_uri = http://10.12.86.75:9000/
web_endpoint_uri = http://testgraylog.testdomain.com:9000/api/
rotation_strategy = count
elasticsearch_max_docs_per_index = 20000000
elasticsearch_max_number_of_indices = 20
retention_strategy = delete
elasticsearch_shards = 4
elasticsearch_replicas = 0
elasticsearch_index_prefix = graylog
allow_leading_wildcard_searches = false
allow_highlighting = false
elasticsearch_cluster_name = elastic-graylog-cluster
elasticsearch_discovery_zen_ping_multicast_enabled = false
elasticsearch_discovery_zen_ping_unicast_hosts = 10.12.86.74:9300, 10.12.86.75:9300
elasticsearch_network_host = 10.12.86.75
elasticsearch_analyzer = standard
output_batch_size = 500
output_flush_interval = 1
output_fault_count_threshold = 5
output_fault_penalty_seconds = 30
processbuffer_processors = 5
outputbuffer_processors = 3
processor_wait_strategy = blocking
ring_size = 65536
inputbuffer_ring_size = 65536
inputbuffer_processors = 2
inputbuffer_wait_strategy = blocking
message_journal_enabled = true
message_journal_dir = /var/lib/graylog-server/journal
lb_recognition_period_seconds = 3
mongodb_uri = mongodb://mongodb-primary:27017,mongodb-secondary:27017,mongodb-arbit:27017/graylog2
mongodb_max_connections = 1000
mongodb_threads_allowed_to_block_multiplier = 5
content_packs_dir = /usr/share/graylog-server/contentpacks
content_packs_auto_load = grok-patterns.json
proxied_requests_thread_pool_size = 32
```

ES Config:

```yaml
cluster.name: elastic-graylog-cluster
node.name: es-graylog-node2
path.data: /esdata
bootstrap.memory_lock: true
network.host: 10.12.86.75
discovery.zen.ping.multicast.enabled: false
discovery.zen.ping.unicast.hosts: ["10.12.86.74:9300", "10.12.86.75:9300"]
```

MongoDB2 Config:

```yaml
systemLog:
  destination: file
  logAppend: true
  path: /var/log/mongodb/mongod.log
storage:
  dbPath: /var/lib/mongo
  journal:
    enabled: true
processManagement:
  fork: true # fork and run in background
  pidFilePath: /var/run/mongodb/mongod.pid # location of pidfile
net:
  port: 27017
  bindIp: 10.12.86.75 # Listen to local interface only, comment to listen on all interfaces.
replication:
  replSetName: rs0
```

MongoDB Arbiter Config:

```yaml
systemLog:
  destination: file
  logAppend: true
  path: /var/log/mongodb/mongod.log
storage:
  dbPath: /var/lib/mongo
  journal:
    enabled: true
processManagement:
  fork: true # fork and run in background
  pidFilePath: /var/run/mongodb/mongod.pid # location of pidfile
net:
  port: 27017
  bindIp: 10.12.86.76 # Listen to local interface only, comment to listen on all interfaces.
replication:
  replSetName: rs0
```
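The two Graylog configs are supposed to be identical apart from the node-specific settings, so I wanted to confirm that nothing else differs. This is a minimal Python sketch, assuming both files only use the plain `key = value` lines shown above (Graylog's `server.conf` is a Java properties file, and this simplified parser ignores the other properties syntax). The helper names `parse_graylog_conf` and `diff_confs` are my own, and `NODE1`/`NODE2` are excerpts of the configs above:

```python
def parse_graylog_conf(text: str) -> dict:
    """Parse `key = value` lines into a dict, skipping blank lines and # comments.

    Simplified: ':' separators, '!' comments and line continuations, which the
    Java properties format also allows, are not handled here.
    """
    conf = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")  # split on the first '=' only
        if sep:  # ignore lines without '='
            conf[key.strip()] = value.strip()
    return conf


def diff_confs(a: dict, b: dict) -> dict:
    """Return {key: (value_a, value_b)} for every key whose values differ (None = missing)."""
    return {
        key: (a.get(key), b.get(key))
        for key in sorted(a.keys() | b.keys())
        if a.get(key) != b.get(key)
    }


# Excerpts of the two Graylog configs above; paste the full files to compare everything.
NODE1 = """
is_master = true
rest_listen_uri = http://10.12.86.74:9000/api/
web_listen_uri = http://10.12.86.74:9000/
web_endpoint_uri = http://testgraylog.testdomain.com:9000/api/
elasticsearch_network_host = 10.12.86.74
mongodb_uri = mongodb://mongodb-primary:27017,mongodb-secondary:27017,mongodb-arbit:27017/graylog2
"""

NODE2 = """
is_master = false
rest_listen_uri = http://10.12.86.75:9000/api/
web_listen_uri = http://10.12.86.75:9000/
web_endpoint_uri = http://testgraylog.testdomain.com:9000/api/
elasticsearch_network_host = 10.12.86.75
mongodb_uri = mongodb://mongodb-primary:27017,mongodb-secondary:27017,mongodb-arbit:27017/graylog2
"""

if __name__ == "__main__":
    diff = diff_confs(parse_graylog_conf(NODE1), parse_graylog_conf(NODE2))
    for key, (v1, v2) in diff.items():
        print(f"{key}: node1={v1!r} node2={v2!r}")
```

On these excerpts, only `elasticsearch_network_host`, `is_master`, `rest_listen_uri` and `web_listen_uri` differ, which is what I intended. Both nodes share the same `web_endpoint_uri` and `mongodb_uri`.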

HAProxy Config:

```
global
    log 127.0.0.1 local2
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 4000
    user haproxy
    group haproxy
    daemon
    stats socket /var/lib/haproxy/stats

listen stats 10.12.86.74:12230
    mode http
    log global
    maxconn 10
    clitimeout 100s
    srvtimeout 100s
    contimeout 100s
    timeout queue 100s
    stats enable
    stats hide-version
    stats refresh 30s
    stats show-node
    stats auth admin:password
    stats uri /haproxy?stats

defaults
    mode http
    log global
    option httplog
    option dontlognull
    option http-server-close
    option forwardfor except 127.0.0.0/8
    option redispatch
    retries 3
    timeout http-request 10s
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout http-keep-alive 10s
    timeout check 10s
    maxconn 3000

# Frontend WEB Redirection
frontend graylog_http
    bind *:80
    option forwardfor
    http-request add-header X-Forwarded-Host %[req.hdr(host)]
    http-request add-header X-Forwarded-Server %[req.hdr(host)]
    http-request add-header X-Forwarded-Port %[dst_port]
    acl is_graylog hdr_dom(host) -i -m str testgraylog.testdomain.com
    use_backend graylog_be_webserver if is_graylog

backend graylog_be_webserver
    description Backend Web Servers
    balance roundrobin
    option httpchk HEAD /api/system/lbstatus
    http-request set-header X-Graylog-Server-URL http://testgraylog.testdomain.com/api
    server graylog1 10.12.86.74:9000 maxconn 20 check
    server graylog2 10.12.86.75:9000 maxconn 20 check

# Log Load Balancing
frontend graylog_tcp
    bind *:12221
    mode tcp
    default_backend log_pools

backend log_pools
    balance roundrobin
    mode tcp
    server graylog-logging1 10.12.86.74:12222
    server graylog-logging2 10.12.86.75:12222
```
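One thing I want to rule out is a mismatch between the URL Graylog advertises to the browser and what HAProxy actually exposes. `web_endpoint_uri` on both nodes points at `testgraylog.testdomain.com:9000`, while the `graylog_http` frontend binds port 80 and sets `X-Graylog-Server-URL` without a port. Below is a small Python sketch to compare the three values. The helper names are my own, and I have not confirmed which of `web_endpoint_uri` and the header Graylog actually uses when both are set:

```python
from urllib.parse import urlsplit


def effective_port(url: str) -> int:
    """Port a client would connect to, falling back to the scheme's default."""
    parts = urlsplit(url)
    if parts.port is not None:
        return parts.port
    return 443 if parts.scheme == "https" else 80


def endpoint_mismatches(endpoint_uri: str, lb_bind_port: int, header_url: str) -> list:
    """List differences between web_endpoint_uri, the LB frontend port and X-Graylog-Server-URL."""
    problems = []
    endpoint_port = effective_port(endpoint_uri)
    if endpoint_port != lb_bind_port:
        problems.append(
            f"{endpoint_uri} uses port {endpoint_port}, "
            f"but the HAProxy frontend binds port {lb_bind_port}"
        )
    if urlsplit(endpoint_uri).hostname != urlsplit(header_url).hostname:
        problems.append("host in web_endpoint_uri differs from X-Graylog-Server-URL")
    if endpoint_port != effective_port(header_url):
        problems.append(
            f"port in web_endpoint_uri ({endpoint_port}) differs "
            f"from X-Graylog-Server-URL ({effective_port(header_url)})"
        )
    return problems


if __name__ == "__main__":
    # Values copied from the Graylog and HAProxy configs above.
    for problem in endpoint_mismatches(
        "http://testgraylog.testdomain.com:9000/api/",
        80,
        "http://testgraylog.testdomain.com/api",
    ):
        print(problem)
```

With the values from my configs it reports two port mismatches: `web_endpoint_uri` uses 9000 while both the frontend and the header use 80.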

This is my current running config, and I can't seem to find the mistake I made.