Yep… I sent a 400 000-character line into the GELF input, and WITH this regexp it got stuck in the buffer.

Without it, the message is stored in full and Graylog does not even blink. Maybe the message field is not indexed; the line is 440 KB long, LOL…

There is no question that the condition is a runaway regex. You can plug your regex (below) into a test at www.regex101.com, and even a small (well… 34k…) text file will time out from catastrophic backtracking (I had several posts suggesting this is the issue…). When I take out the negative lookahead (?!…) portion, it doesn’t time out, but the regex has to step over two and a half million times for a 34k text file that has no matches.

^((?!xxx|xxx|xxx|xxx|xxx).)*$

--

^((?!docId|took|filename|journalLines|Journal).)*$

You definitely want to find (or fail) quicker than that. I don’t know enough about the variety of data coming in to help with efficiency… that would be another post/topic anyway.
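For the specific regex above, the question the lookahead answers is simply “does this line contain none of these words?”. A minimal sketch in Python’s `re` (not Graylog’s engine; the sample lines are made up) showing that a plain alternation search, negated, makes the same keep/drop decision without a lookahead per character or a repeated capturing group:

```python
import re

# The tempered negative lookahead from the thread: it only matches a line in
# which none of the listed words starts at any position.
TEMPERED = re.compile(r"^((?!docId|took|filename|journalLines|Journal).)*$")

# The same keep/drop decision as one plain alternation search, negated.
ANY_WORD = re.compile(r"docId|took|filename|journalLines|Journal")


def keep_tempered(line: str) -> bool:
    """True if the line contains none of the words (lookahead version)."""
    return TEMPERED.match(line) is not None


def keep_plain(line: str) -> bool:
    """True if the line contains none of the words (plain search version)."""
    return ANY_WORD.search(line) is None


samples = [
    "GET 200 for country DE",
    "docId=42 stored",
    "request took 15 ms",
    "Journal flushed",
    "journal flushed",  # lowercase: not in the (case-sensitive) word list
]

for s in samples:
    print(repr(s), keep_tempered(s), keep_plain(s))
```

Both forms are case-sensitive, so `journal flushed` is kept by both. Whether the plain form is also cheaper inside Graylog is an assumption worth measuring on real data.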

I’d like to post my favorite link about regex again: Regexploit. It explains why plain greedy data is not suitable for your case.

@ihe - my favorite catastrophic backtracking link: Runaway Regular Expressions: Catastrophic Backtracking

@anonimus - If you are working in pipelines, you could set up cascading rules with stages. In stage one you flag messages by type (when the message starts with xxx, then set the field mess_type: xxx); in stage two you take action(s) based on the mess_type flag (when mess_type == xxx …). You can get more complicated than that, of course, but the objective is to optimize actions for throughput… I imagine this is why they didn’t include loops…
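This is not Graylog pipeline rule syntax; it is a small Python sketch of the same two-stage idea, with hypothetical prefixes (`ORDER`, `AUDIT`) and hypothetical fields standing in for the `xxx` placeholders. Stage one does a cheap `startswith` check and only sets `mess_type`; stage two dispatches on that flag:

```python
from typing import Callable, Dict

# Hypothetical prefixes and type names; "xxx" in the thread is a placeholder.
TYPE_BY_PREFIX = {
    "ORDER": "order",
    "AUDIT": "audit",
}


def stage_one(message: dict) -> dict:
    """Stage 1: cheap prefix check that only sets a mess_type flag."""
    text = message["message"]
    for prefix, mess_type in TYPE_BY_PREFIX.items():
        if text.startswith(prefix):
            message["mess_type"] = mess_type
            break
    return message


def handle_order(message: dict) -> None:
    message["order_id"] = message["message"].split()[1]  # hypothetical field


def handle_audit(message: dict) -> None:
    message["audited"] = True  # hypothetical field


# Stage 2 actions, keyed by the flag set in stage 1.
ACTIONS: Dict[str, Callable[[dict], None]] = {
    "order": handle_order,
    "audit": handle_audit,
}


def stage_two(message: dict) -> dict:
    """Stage 2: act only on the flag; the message text is not re-scanned."""
    action = ACTIONS.get(message.get("mess_type", ""))
    if action is not None:
        action(message)
    return message


for raw in ["ORDER 1234 created", "AUDIT login ok", "something else"]:
    print(stage_two(stage_one({"message": raw})))
```

The point of the split is that the work in stage two never has to re-scan the message text to decide what to do; unflagged messages simply pass through.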

Let us know what you come up with!!

Going over to the devs with Lucille for a heart-to-heart talk…

Lucille was very, very persuasive.

Out with the old… replaced it with a regular “includes string” condition…

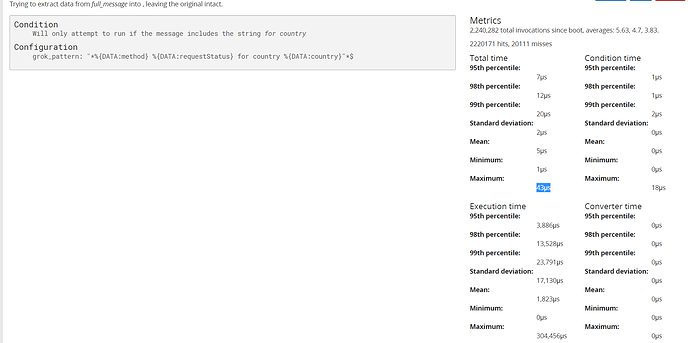

Trying to extract data from full_message into , leaving the original intact.

Condition

- Will only attempt to run if the message includes the string “for country”

Configuration

- grok_pattern: "%{DATA:method} %{DATA:requestStatus} for country %{DATA:country}"$
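To see what this pattern turns into, here is a Python sketch that expands only the `%{DATA:name}` placeholders into lazy named groups, assuming DATA is `.*?` as discussed further down in this thread. Python’s `re.search` is a stand-in here; whether Graylog’s grok extractor anchors its match the same way is not something this thread confirms, and the sample line is made up:

```python
import re


def grok_data_to_regex(pattern: str) -> str:
    """Translate only %{DATA:name} placeholders into lazy named groups.

    Assumption: %{DATA:name} expands to (?P<name>.*?).
    """
    return re.sub(r"%\{DATA:(\w+)\}", r"(?P<\1>.*?)", pattern)


grok = "%{DATA:method} %{DATA:requestStatus} for country %{DATA:country}"
line = "GET 200 for country DE"  # made-up sample line

unanchored = re.compile(grok_data_to_regex(grok))
anchored = re.compile(grok_data_to_regex(grok) + "$")

print(unanchored.search(line).groupdict())
print(anchored.search(line).groupdict())
```

With a plain unanchored search, the trailing lazy `country` group is satisfied by the empty string; adding an end anchor is what makes it expand to capture `DE`.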

Magic!

Still need to test, but it looks like all the other extractors now… no negative whatever, just a simple grep.

I hope it works!

FYI: the technology underlying GROK is regex, and even in the search where you are ONLY searching for “for country” it is likely regex being used. The GROK definition for DATA is generally .*?, which is the “lazy” version of .*… which is one of the things that causes runaway backtracking.

*? - matches the previous token (in your case, with DATA, that token is ., which matches ‘anything’) between zero and unlimited times, as few times as possible, expanding as needed (lazy)
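A small Python sketch (made-up sample text) of the lazy/greedy difference: both find “ for country”, but they reach it from opposite ends, and either way the engine may try many candidate lengths for the group before it succeeds:

```python
import re

text = "GET 200 for country DE, retried for country AT"  # made-up sample

# Greedy: .* first runs to the end of the line, then backtracks until
# " for country" fits, so the group stops at the LAST occurrence.
greedy = re.match(r"(.*) for country", text).group(1)

# Lazy: .*? starts at zero characters and grows one at a time, so the group
# stops at the FIRST occurrence.
lazy = re.match(r"(.*?) for country", text).group(1)

print(repr(greedy))
print(repr(lazy))
```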

I had a test file of 35 million characters / 283,800 lines, and it took 651,482 steps to find “for country”… Assuming it’s found, you then have to find it again with two groups of DATA (.*?) separated by a space in front of it… regex101 times out for me on that. Every group of things it finds has to be checked twice until it gets to “for country”.

Optimally, in large files you don’t want to search everywhere; you want to decide quickly which actions to take, and the first actions should maximize the reduction of work the next action has to do.
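A minimal Python sketch of that ordering, with a made-up pattern and made-up lines: a cheap substring check decides first, and only the lines that survive it ever reach the more expensive regex. The counter just makes the reduction visible:

```python
import re

# Lazy DATA-style groups plus an end anchor; pattern and lines are made up.
EXTRACT = re.compile(
    r"(?P<method>.*?) (?P<requestStatus>.*?) for country (?P<country>.*?)$"
)


def extract_all(lines):
    """Run the cheap substring check first; only survivors reach the regex."""
    results = []
    regex_runs = 0
    for line in lines:
        if "for country" not in line:  # cheap decision, discards most lines
            continue
        regex_runs += 1
        match = EXTRACT.search(line)
        if match:
            results.append(match.groupdict())
    return results, regex_runs


lines = ["heartbeat ok"] * 1000 + ["GET 200 for country DE", "POST 500 for country AT"]
results, regex_runs = extract_all(lines)
print(len(lines), regex_runs, results)
```

Here 1002 lines come in, but the regex runs only twice; how much that saves on real traffic depends on how selective the first check is.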

You may be bound to your data, so it may be a moot issue.

The new extractor patterns do work: 400 000 characters in a single line, no issues, nothing stuck in the buffer.

So, TL;DR: negative grep and lookahead regexp patterns - BAD

Thanks all!
